5: TDD in High Gear and Low Gear

We’ve introduced the service layer to capture some of the additional orchestration responsibilities we need from a working application. The service layer helps us clearly define our use cases and the workflow for each: what we need to get from our repositories, what pre-checks and current state validation we should do, and what we save at the end.

But currently, many of our unit tests operate at a lower level, acting directly on the model. In this chapter we’ll discuss the trade-offs involved in moving those tests up to the service-layer level, and some more general testing guidelines.

How Is Our Test Pyramid Looking?

Let’s see what this move to using a service layer, with its own service-layer tests, does to our test pyramid:

$ grep -c test_ */*/test_*.py

tests/unit/test_allocate.py:4

tests/unit/test_batches.py:8

tests/unit/test_services.py:3

tests/integration/test_orm.py:6

tests/integration/test_repository.py:2

tests/e2e/test_api.py:2Not bad! We have 15 unit tests, 8 integration tests, and just 2 end-to-end tests. That’s already a healthy-looking test pyramid.

Should Domain Layer Tests Move to the Service Layer?

Let’s see what happens if we take this a step further. Since we can test our software against the service layer, we don’t really need tests for the domain model anymore. Instead, we could rewrite all of the domain-level tests from [chapter_01_domain_model] in terms of the service layer:

# domain-layer test:

def test_prefers_current_stock_batches_to_shipments():

in_stock_batch = Batch("in-stock-batch", "RETRO-CLOCK", 100, eta=None)

shipment_batch = Batch("shipment-batch", "RETRO-CLOCK", 100, eta=tomorrow)

line = OrderLine("oref", "RETRO-CLOCK", 10)

allocate(line, [in_stock_batch, shipment_batch])

assert in_stock_batch.available_quantity == 90

assert shipment_batch.available_quantity == 100

# service-layer test:

def test_prefers_warehouse_batches_to_shipments():

in_stock_batch = Batch("in-stock-batch", "RETRO-CLOCK", 100, eta=None)

shipment_batch = Batch("shipment-batch", "RETRO-CLOCK", 100, eta=tomorrow)

repo = FakeRepository([in_stock_batch, shipment_batch])

session = FakeSession()

line = OrderLine('oref', "RETRO-CLOCK", 10)

services.allocate(line, repo, session)

assert in_stock_batch.available_quantity == 90

assert shipment_batch.available_quantity == 100Why would we want to do that?

Tests are supposed to help us change our system fearlessly, but often we see teams writing too many tests against their domain model. This causes problems when they come to change their codebase and find that they need to update tens or even hundreds of unit tests.

This makes sense if you stop to think about the purpose of automated tests. We use tests to enforce that a property of the system doesn’t change while we’re working. We use tests to check that the API continues to return 200, that the database session continues to commit, and that orders are still being allocated.

If we accidentally change one of those behaviors, our tests will break. The flip side, though, is that if we want to change the design of our code, any tests relying directly on that code will also fail.

As we get further into the book, you’ll see how the service layer forms an API for our system that we can drive in multiple ways. Testing against this API reduces the amount of code that we need to change when we refactor our domain model. If we restrict ourselves to testing only against the service layer, we won’t have any tests that directly interact with "private" methods or attributes on our model objects, which leaves us freer to refactor them.

|

Tip

|

Every line of code that we put in a test is like a blob of glue, holding the system in a particular shape. The more low-level tests we have, the harder it will be to change things. |

On Deciding What Kind of Tests to Write

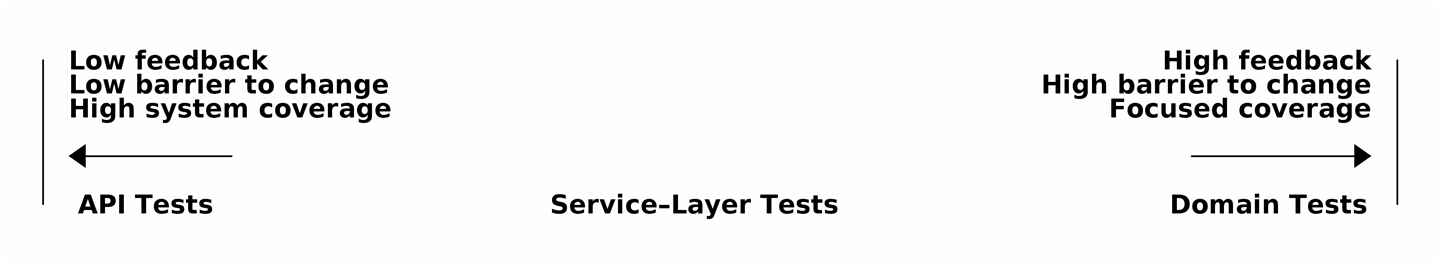

You might be asking yourself, "Should I rewrite all my unit tests, then? Is it wrong to write tests against the domain model?" To answer those questions, it’s important to understand the trade-off between coupling and design feedback (see The test spectrum).

[ditaa, apwp_0501] | Low feedback High feedback | | Low barrier to change High barrier to change | | High system coverage Focused coverage | | | | <--------- ----------> | | | | API Tests Service–Layer Tests Domain Tests |

Extreme programming (XP) exhorts us to "listen to the code." When we’re writing tests, we might find that the code is hard to use or notice a code smell. This is a trigger for us to refactor, and to reconsider our design.

We only get that feedback, though, when we’re working closely with the target code. A test for the HTTP API tells us nothing about the fine-grained design of our objects, because it sits at a much higher level of abstraction.

On the other hand, we can rewrite our entire application and, so long as we don’t change the URLs or request formats, our HTTP tests will continue to pass. This gives us confidence that large-scale changes, like changing the database schema, haven’t broken our code.

At the other end of the spectrum, the tests we wrote in [chapter_01_domain_model] helped us to flesh out our understanding of the objects we need. The tests guided us to a design that makes sense and reads in the domain language. When our tests read in the domain language, we feel comfortable that our code matches our intuition about the problem we’re trying to solve.

Because the tests are written in the domain language, they act as living documentation for our model. A new team member can read these tests to quickly understand how the system works and how the core concepts interrelate.

We often "sketch" new behaviors by writing tests at this level to see how the code might look. When we want to improve the design of the code, though, we will need to replace or delete these tests, because they are tightly coupled to a particular implementation.

High and Low Gear

Most of the time, when we are adding a new feature or fixing a bug, we don’t need to make extensive changes to the domain model. In these cases, we prefer to write tests against services because of the lower coupling and higher coverage.

For example, when writing an add_stock function or a cancel_order feature,

we can work more quickly and with less coupling by writing tests against the

service layer.

When starting a new project or when hitting a particularly gnarly problem, we will drop back down to writing tests against the domain model so we get better feedback and executable documentation of our intent.

The metaphor we use is that of shifting gears. When starting a journey, the bicycle needs to be in a low gear so that it can overcome inertia. Once we’re off and running, we can go faster and more efficiently by changing into a high gear; but if we suddenly encounter a steep hill or are forced to slow down by a hazard, we again drop down to a low gear until we can pick up speed again.

Fully Decoupling the Service-Layer Tests from the Domain

We still have direct dependencies on the domain in our service-layer tests, because we use domain objects to set up our test data and to invoke our service-layer functions.

To have a service layer that’s fully decoupled from the domain, we need to rewrite its API to work in terms of primitives.

Our service layer currently takes an OrderLine domain object:

def allocate(line: OrderLine, repo: AbstractRepository, session) -> str:How would it look if its parameters were all primitive types?

def allocate(

orderid: str, sku: str, qty: int,

repo: AbstractRepository, session

) -> str:We rewrite the tests in those terms as well:

def test_returns_allocation():

batch = model.Batch("batch1", "COMPLICATED-LAMP", 100, eta=None)

repo = FakeRepository([batch])

result = services.allocate("o1", "COMPLICATED-LAMP", 10, repo, FakeSession())

assert result == "batch1"But our tests still depend on the domain, because we still manually instantiate

Batch objects. So, if one day we decide to massively refactor how our Batch

model works, we’ll have to change a bunch of tests.

Mitigation: Keep All Domain Dependencies in Fixture Functions

We could at least abstract that out to a helper function or a fixture

in our tests. Here’s one way you could do that, adding a factory

function on FakeRepository:

class FakeRepository(set):

@staticmethod

def for_batch(ref, sku, qty, eta=None):

return FakeRepository([

model.Batch(ref, sku, qty, eta),

])

...

def test_returns_allocation():

repo = FakeRepository.for_batch("batch1", "COMPLICATED-LAMP", 100, eta=None)

result = services.allocate("o1", "COMPLICATED-LAMP", 10, repo, FakeSession())

assert result == "batch1"At least that would move all of our tests' dependencies on the domain into one place.

Adding a Missing Service

We could go one step further, though. If we had a service to add stock, we could use that and make our service-layer tests fully expressed in terms of the service layer’s official use cases, removing all dependencies on the domain:

def test_add_batch():

repo, session = FakeRepository([]), FakeSession()

services.add_batch("b1", "CRUNCHY-ARMCHAIR", 100, None, repo, session)

assert repo.get("b1") is not None

assert session.committed|

Tip

|

In general, if you find yourself needing to do domain-layer stuff directly in your service-layer tests, it may be an indication that your service layer is incomplete. |

And the implementation is just two lines:

def add_batch(

ref: str, sku: str, qty: int, eta: Optional[date],

repo: AbstractRepository, session,

) -> None:

repo.add(model.Batch(ref, sku, qty, eta))

session.commit()

def allocate(

orderid: str, sku: str, qty: int,

repo: AbstractRepository, session

) -> str:|

Note

|

Should you write a new service just because it would help remove

dependencies from your tests? Probably not. But in this case, we

almost definitely would need an add_batch service one day anyway.

|

That now allows us to rewrite all of our service-layer tests purely in terms of the services themselves, using only primitives, and without any dependencies on the model:

def test_allocate_returns_allocation():

repo, session = FakeRepository([]), FakeSession()

services.add_batch("batch1", "COMPLICATED-LAMP", 100, None, repo, session)

result = services.allocate("o1", "COMPLICATED-LAMP", 10, repo, session)

assert result == "batch1"

def test_allocate_errors_for_invalid_sku():

repo, session = FakeRepository([]), FakeSession()

services.add_batch("b1", "AREALSKU", 100, None, repo, session)

with pytest.raises(services.InvalidSku, match="Invalid sku NONEXISTENTSKU"):

services.allocate("o1", "NONEXISTENTSKU", 10, repo, FakeSession())This is a really nice place to be in. Our service-layer tests depend on only the service layer itself, leaving us completely free to refactor the model as we see fit.

Carrying the Improvement Through to the E2E Tests

In the same way that adding add_batch helped decouple our service-layer

tests from the model, adding an API endpoint to add a batch would remove

the need for the ugly add_stock fixture, and our E2E tests could be free

of those hardcoded SQL queries and the direct dependency on the database.

Thanks to our service function, adding the endpoint is easy, with just a little JSON wrangling and a single function call required:

@app.route("/add_batch", methods=["POST"])

def add_batch():

session = get_session()

repo = repository.SqlAlchemyRepository(session)

eta = request.json["eta"]

if eta is not None:

eta = datetime.fromisoformat(eta).date()

services.add_batch(

request.json["ref"],

request.json["sku"],

request.json["qty"],

eta,

repo,

session,

)

return "OK", 201|

Note

|

Are you thinking to yourself, POST to /add_batch? That’s not very RESTful! You’re quite right. We’re being happily sloppy, but if you’d like to make it all more RESTy, maybe a POST to /batches, then knock yourself out! Because Flask is a thin adapter, it’ll be easy. See the next sidebar. |

And our hardcoded SQL queries from conftest.py get replaced with some API calls, meaning the API tests have no dependencies other than the API, which is also nice:

def post_to_add_batch(ref, sku, qty, eta):

url = config.get_api_url()

r = requests.post(

f"{url}/add_batch", json={"ref": ref, "sku": sku, "qty": qty, "eta": eta}

)

assert r.status_code == 201

@pytest.mark.usefixtures("postgres_db")

@pytest.mark.usefixtures("restart_api")

def test_happy_path_returns_201_and_allocated_batch():

sku, othersku = random_sku(), random_sku("other")

earlybatch = random_batchref(1)

laterbatch = random_batchref(2)

otherbatch = random_batchref(3)

post_to_add_batch(laterbatch, sku, 100, "2011-01-02")

post_to_add_batch(earlybatch, sku, 100, "2011-01-01")

post_to_add_batch(otherbatch, othersku, 100, None)

data = {"orderid": random_orderid(), "sku": sku, "qty": 3}

url = config.get_api_url()

r = requests.post(f"{url}/allocate", json=data)

assert r.status_code == 201

assert r.json()["batchref"] == earlybatchWrap-Up

Once you have a service layer in place, you really can move the majority of your test coverage to unit tests and develop a healthy test pyramid.

A few things will help along the way:

-

Express your service layer in terms of primitives rather than domain objects.

-

In an ideal world, you’ll have all the services you need to be able to test entirely against the service layer, rather than hacking state via repositories or the database. This pays off in your end-to-end tests as well.

Onto the next chapter!